An open-source alternative to GitHub Copilot that runs locally

As an enthusiastic programmer, I am always eager to explore ways in which AI can enhance my coding skills and efficiency. A while ago, GitHub introduced Copilot, an AI-driven coding assistant capable of completing code snippets, writing unit tests, and explaining code concepts. This tool undoubtedly has the potential to change a programmer's workflow. However, Copilot is not free, and because it relies on OpenAI models running in the cloud, it is less than ideal for those seeking privacy in their coding environment.

Fortunately, there exists an open-source alternative that boasts all of Copilot's features and operates locally on your machine. Let's delve into the world of Twinny and discover its potential.

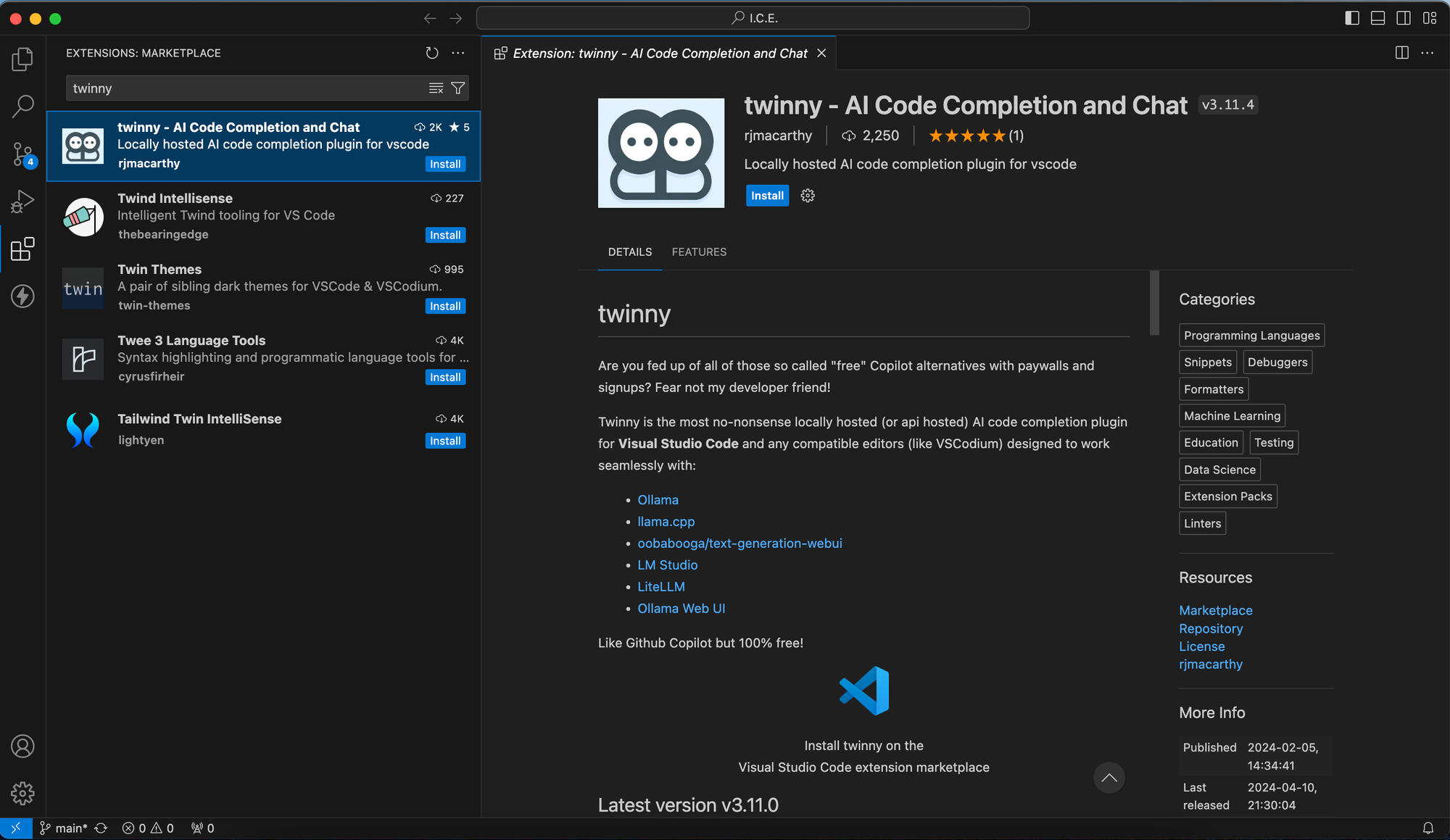

Twinny

Twinny is an open-source alternative to GitHub Copilot that runs locally. It is available for Visual Studio Code and for VSCodium, the open-source alternative that I use instead. Either way, just search for "twinny" in the marketplace and install it.

We will use Twinny together with Ollama. If you have already installed Ollama, you can skip the installation step.

Install Ollama

Ollama is a piece of software that allows us to deploy and use Large Language Models locally on our machine. We will download Ollama from its website: just choose your OS, then download and install it.

Download Models

With Ollama installed, we now need to download the models we want to use. Ollama publishes a list of all supported models, but here are my recommendations for use with Twinny:

- openchat: We will use this model for chatting about our code, debugging and brainstorming.
- deepseek-coder:1.3b-base-q4_1: We will use this model for code completion.

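To use these models we first need to download them with the following commands. Note that ollama run downloads a model on first use and then opens an interactive prompt, which you can leave by typing /bye.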

# openchat

ollama run openchat

# deepseek-coder:1.3b-base-q4_1

ollama run deepseek-coder:1.3b-base-q4_1

You do not have to use the models above. At any point you can switch to ones that better fit your needs.
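If you want to double-check that both models really are available before configuring Twinny, a small script can help. The following is only a sketch: it assumes Ollama is listening on its default local address (http://localhost:11434) and uses the /api/tags endpoint of Ollama's REST API to list downloaded models. The helper names (normalize, missing_models, installed_models) are my own and not part of Ollama or Twinny.

```python
import json
from urllib.request import urlopen

# Assumption: Ollama listens on its default local address.
OLLAMA_URL = "http://localhost:11434"


def normalize(name):
    """Ollama stores a model pulled without a tag under the 'latest' tag."""
    return name if ":" in name else f"{name}:latest"


def missing_models(required, installed):
    """Return the required models that are not in the installed list."""
    have = {normalize(name) for name in installed}
    return [name for name in required if normalize(name) not in have]


def installed_models(base_url=OLLAMA_URL):
    """Ask a running Ollama server which models it has downloaded."""
    with urlopen(f"{base_url}/api/tags", timeout=5) as response:
        payload = json.load(response)
    return [model["name"] for model in payload.get("models", [])]


# Offline example with a hand-written list instead of a live server:
sample = ["openchat:latest"]
print(missing_models(["openchat", "deepseek-coder:1.3b-base-q4_1"], sample))
```

With Ollama running, you would pass installed_models() instead of the hand-written sample list. An empty result means both models are ready.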

Configure Twinny

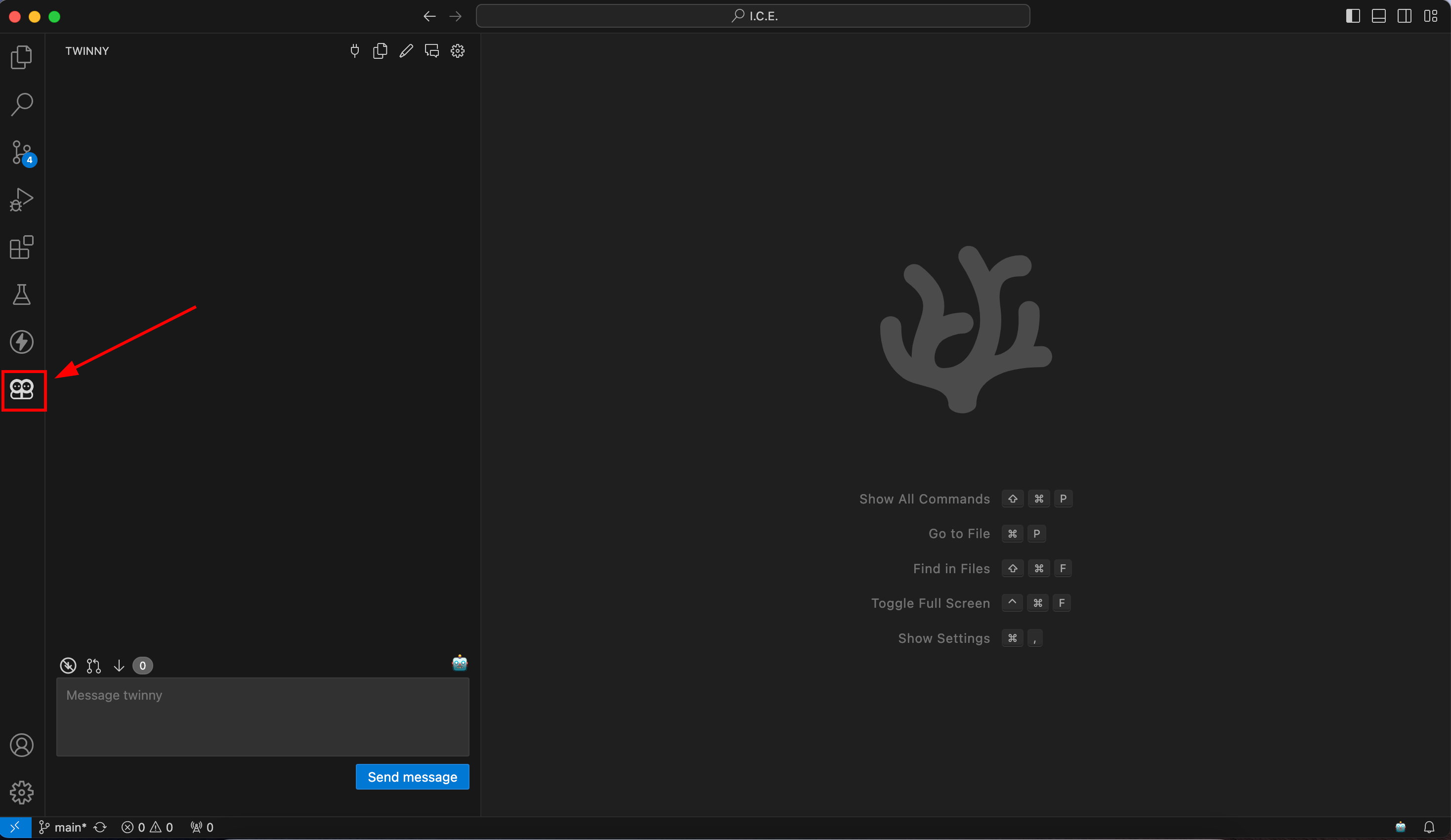

Now that Twinny and our models are installed, we can continue with the setup. Click on the Twinny icon on the left side.

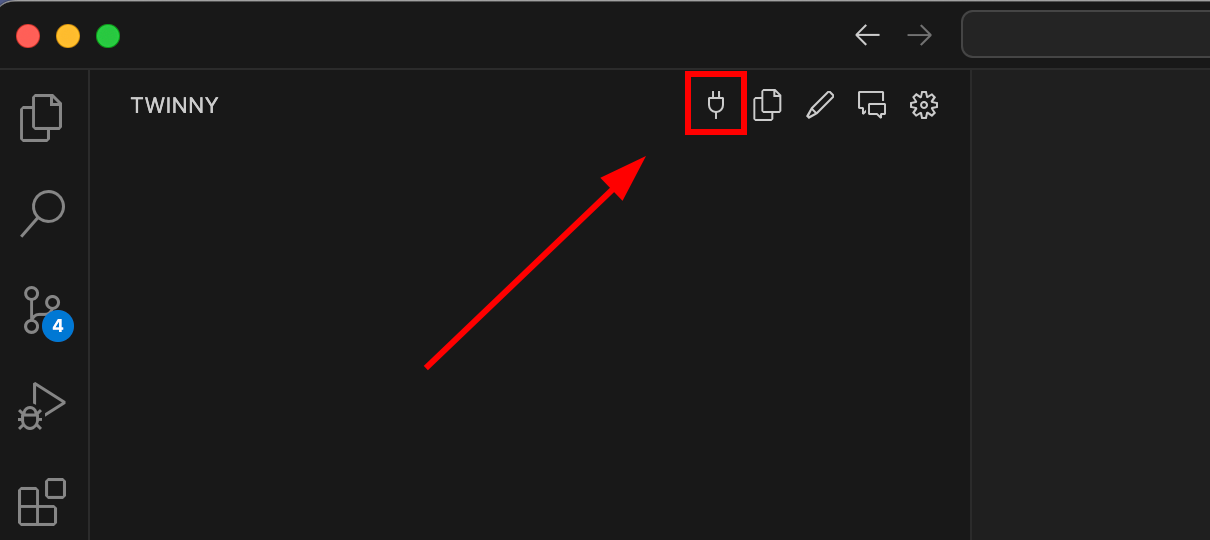

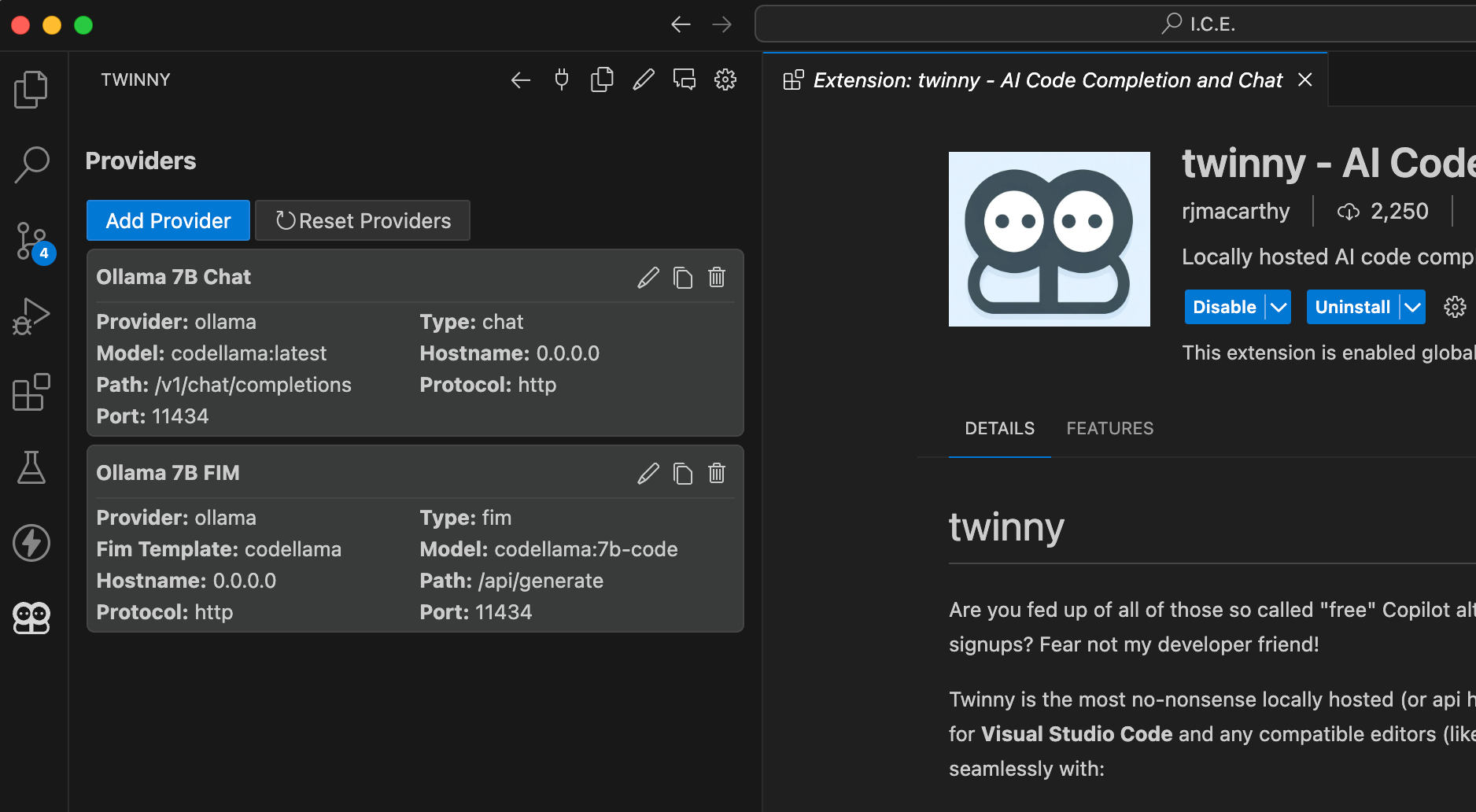

Now we need to tell Twinny which models it should use. To do so, click on the small plug icon to open up a provider overview.

A provider is a model configuration Twinny uses to perform different kinds of tasks. We will update both providers to use openchat as the chat model and deepseek-coder as the completion model. However, you can change and update these providers at any point to use bigger and better models.

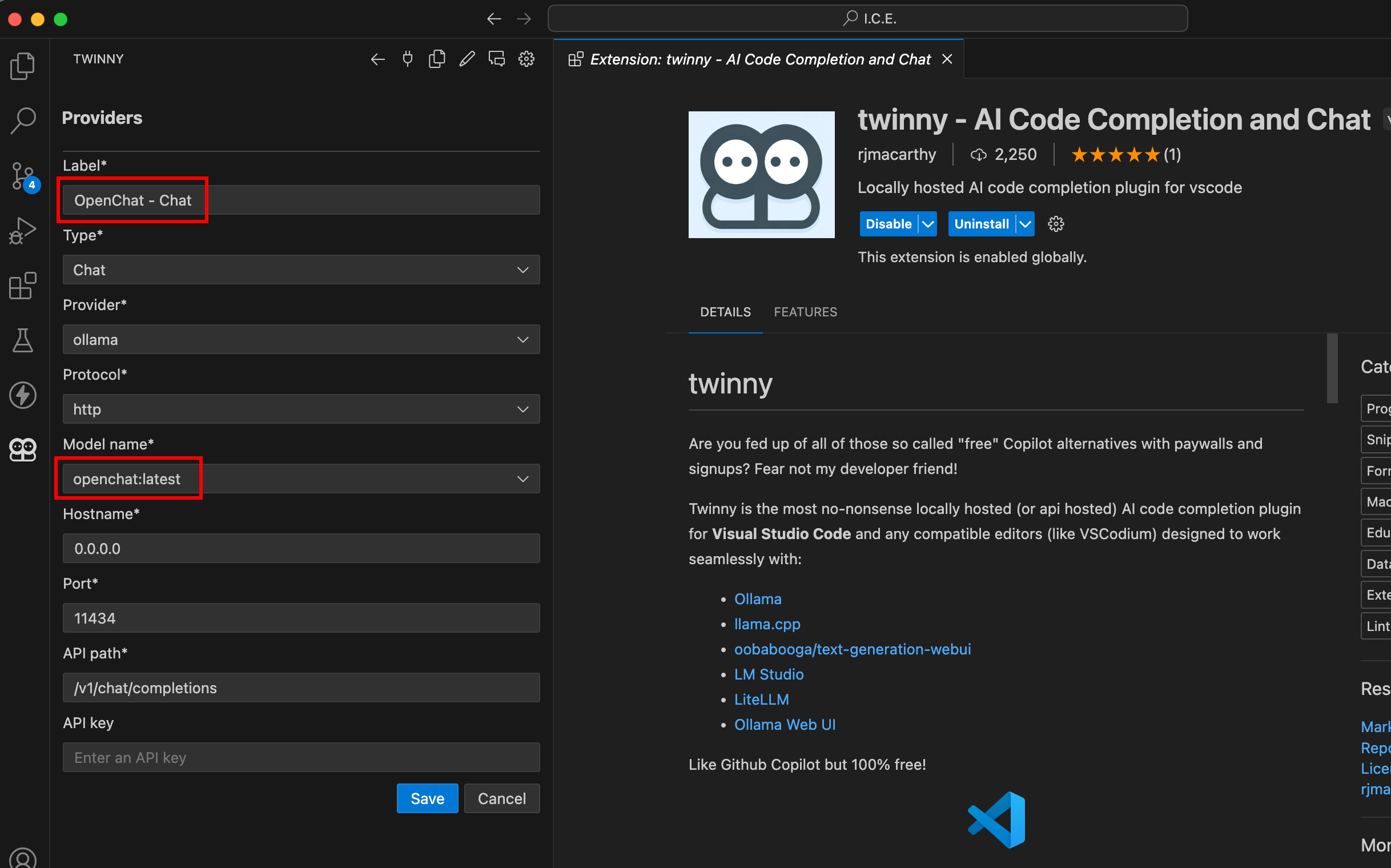

To update the first provider, Ollama 7B Chat, click on the pencil icon and copy the settings shown below:

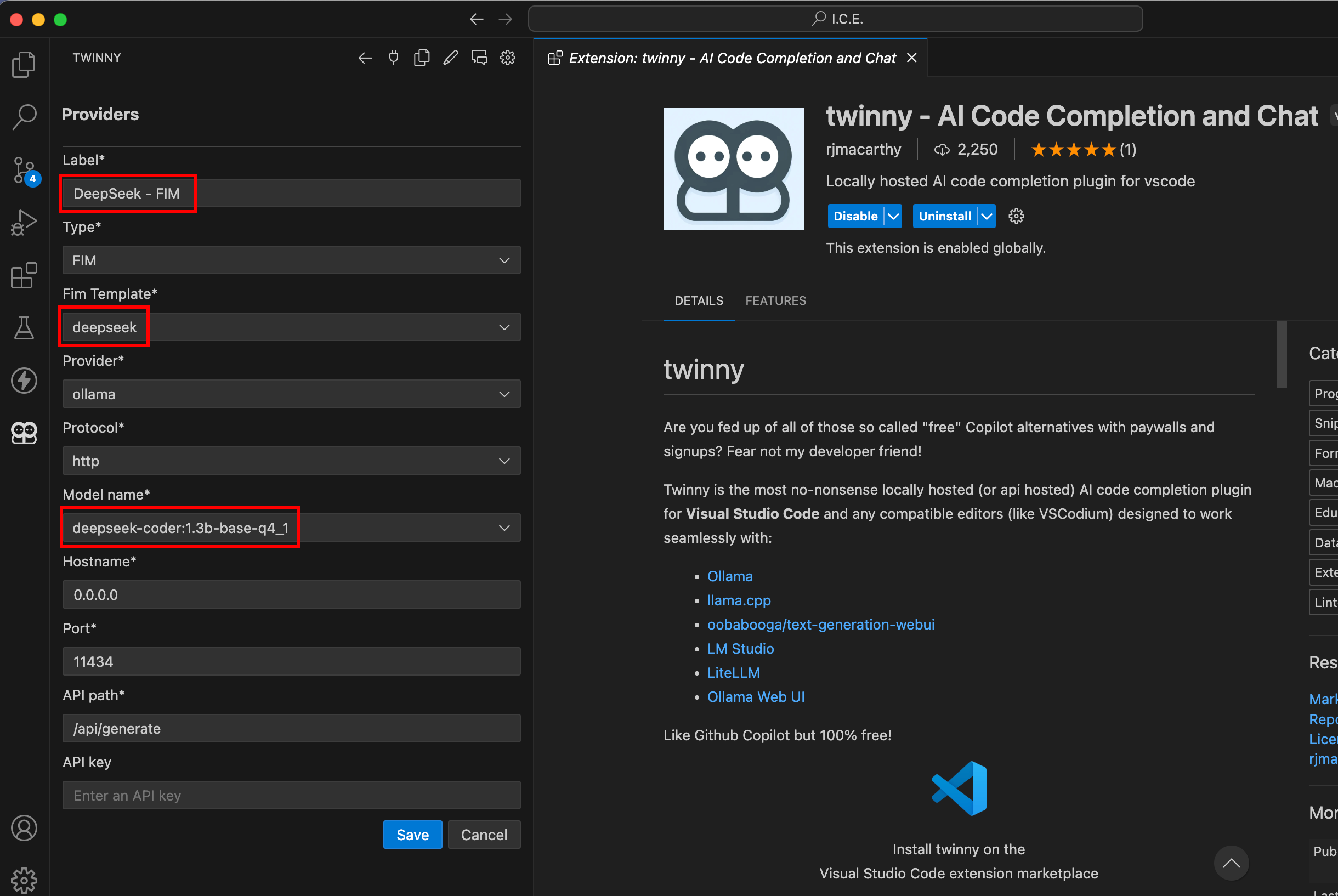

After that, save the model card and do the same for Ollama 7B FIM. FIM stands for "Fill In the Middle", which means that to predict the code at the cursor, the model takes a number of lines above and below the current line into account.
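To make the idea behind FIM more concrete, here is a minimal sketch of how such a prompt could be assembled from the lines around the cursor. This is not Twinny's actual implementation. The function name build_fim_prompt and the marker strings are placeholders I made up for illustration; every FIM-capable model defines its own special tokens, so check the model card for the real ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FimTokens:
    # Placeholder markers for illustration only; each FIM-capable model
    # defines its own special tokens.
    prefix: str = "<PREFIX>"
    suffix: str = "<SUFFIX>"
    middle: str = "<MIDDLE>"


def build_fim_prompt(lines, cursor_line, n_context=3, tokens=FimTokens()):
    """Build a fill-in-the-middle prompt for the line at index cursor_line.

    Up to n_context lines above the cursor become the prefix and up to
    n_context lines below become the suffix. The model is then asked to
    generate the text that belongs in between.
    """
    start = max(0, cursor_line - n_context)
    end = min(len(lines), cursor_line + 1 + n_context)
    prefix = "".join(line + "\n" for line in lines[start:cursor_line])
    suffix = "".join("\n" + line for line in lines[cursor_line + 1:end])
    return f"{tokens.prefix}{prefix}{tokens.suffix}{suffix}{tokens.middle}"


source = ["def add(a, b):", "", "print(add(1, 2))"]
# The cursor sits on the empty line 1, so the model should fill in the body.
print(build_fim_prompt(source, cursor_line=1, n_context=1))
```

The model sees the function signature before the gap and the call after it, which is why FIM completions tend to fit the surrounding code better than completions based on the preceding lines alone.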

With our providers being set we can now start using Twinny for coding...

Fill In the Middle completions

The following video is an example of how you can use Twinny to assist you during coding. Obviously it isn't perfect. However, it is running completely offline on your machine.

Also, if you want multi-line predictions, make sure to enable "Use Multi Line Completions" in the extension settings.

FIM Completions

Debugging

The code we generated in the prior video has an obvious error: we're missing the requests library. So let us use Twinny's chat to find and fix this error.
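If you are curious what a debugging question to the local chat model could look like outside the editor, here is a rough sketch that talks to Ollama directly through the /api/chat endpoint of its REST API. I am assuming Ollama's default address and the openchat model from earlier. The prompt wording and the helper names (build_debug_request, ask_ollama) are my own, and the request Twinny actually sends may look different.

```python
import json
from urllib.request import Request, urlopen

# Assumption: Ollama listens on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_debug_request(code, model="openchat"):
    """Build the JSON body for a non-streaming request to /api/chat."""
    # Hypothetical prompt wording, not the one Twinny uses.
    prompt = f"Find and fix the bug in this code:\n\n{code}"
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def ask_ollama(body, base_url=OLLAMA_URL):
    """Send the body to a running Ollama server and return the reply text."""
    request = Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urlopen(request, timeout=120) as response:
        return json.load(response)["message"]["content"]


snippet = 'response = requests.get("https://example.com")'
body = build_debug_request(snippet)
print(json.dumps(body, indent=2))
# With Ollama running, print(ask_ollama(body)) would show the model's answer.
```

Everything stays on your machine here as well, since the request only goes to localhost.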

Debugging

Code Explanation

Twinny has some more features, one of them being code explanation. The following video shows how to trigger code explanation for a specific code snippet.

Code Explanation

Code Documentation

As the last showcase I chose the documentation feature of Twinny. The following video provides a short example of how to use this feature for a specific code snippet.

Code Documentation

Conclusion

We have now covered some key features of Twinny, the open-source alternative to GitHub Copilot. While writing this article I played with Twinny quite a bit, and I must say that even though it isn't perfect, it can help a lot. Especially for simple tasks it is just amazing.

I hope you enjoyed this post. If you want to know what else you can do with your local Ollama installation, check out my last post on setting up your own chat interface using Open-WebUI.