Introduction to Neural Networks - The Simple Predictor (Part 1)

Welcome! Today we begin a new series of articles on the basics of neural networks. This series will continue over the next few months as we dive deeper and deeper into this interesting world of artificial intelligence. In this very first post, we will talk about the general idea of intelligent systems and what their strengths and weaknesses are.

This series is based on the great book Neuronale Netze selbst programmieren.

The idea behind intelligent systems

For many years, people have been trying to figure out how our brains work and how we can transfer these capabilities to machines. The idea sounds simple: let a computer simulate our brain to achieve similar results. In reality, this is not as easy as it sounds.

Easy for me - Hard for you

Computers are essentially huge calculators that are very good at performing arithmetic operations. That is why we initially used them for calculation, data analysis and visualization, or communication. But people are lazy, so they looked for daily tasks that a machine could automate. Unfortunately, we found that tasks that are very easy for us, such as recognizing objects or speaking in natural language, are very difficult for computers. Take the following example.

For us it is very easy to understand the setting of the image. We see an ape sitting in the jungle, looking directly into the camera. Although it is easy for us, it is very hard for a computer to extract the same information from that image. But what if we switch domains? Let's take a look at the following equation.

It takes us quite a while to work out that the sine of 2π is 0 and that the logarithm to the base 10 of 1000 is 3, so the result of this equation is 0. A computer, on the other hand, only needs a few milliseconds to calculate the result.
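To get a feeling for how little effort this is for a machine, here is a small sketch that evaluates the two terms mentioned above with Python's standard `math` module. It only evaluates the individual terms; the variable names are illustrative.

```python
import math

# Evaluate the two terms mentioned in the text.
# Mathematically sin(2*pi) is 0; in floating point the result is only very close to 0.
sine_term = math.sin(2 * math.pi)
# Logarithm to the base 10 of 1000.
log_term = math.log10(1000)

print(f"sin(2*pi)   = {sine_term:.6f}")
print(f"log10(1000) = {log_term:.6f}")
```

Note that the computer works with floating point numbers, so the sine term comes out as a tiny value next to zero rather than an exact zero.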

Neural Networks

One successful attempt to model the human brain is the so-called "neural network". This is an algorithm that imitates our brain and enables machines to follow a similar "thought process" to ours. A neural network consists of so-called "neurons", which are simple mathematical functions that each perform a specific task.

One neuron alone is not particularly powerful, but a neural network combines thousands of them, which allows it to do incredible things. We will look at neural networks in more detail in one of the upcoming posts, but for now we want to focus on the smallest building blocks: the neurons.

Neurons

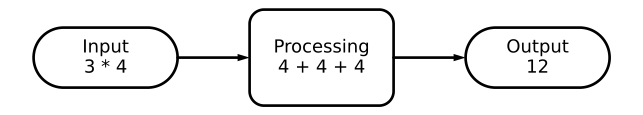

Let us begin by imagining a neuron as a simple input-output machine. We provide some input, the neuron does some processing and outputs the result.

The task we want the neuron to perform could be anything. We will start with a very simple example. Let's assume we want the neuron to calculate a simple multiplication statement. The machine would look like this.

The neuron takes the statement as input, calculates the result and provides it as output. Since this is a very basic example, we now want to increase the complexity of the task.
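As a minimal sketch, such an input-output machine can be written as a plain Python function. The name `multiplication_neuron` and the operands 3 and 4 are made up for illustration; they are not taken from the diagram.

```python
def multiplication_neuron(a: float, b: float) -> float:
    """Hypothetical input-output machine: takes two factors, returns their product."""
    return a * b


# Input goes in, the result comes out.
result = multiplication_neuron(3, 4)
print(result)
```

There is nothing to learn here yet: the processing step is fixed and fully known in advance.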

Train a neuron

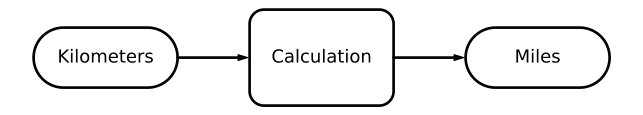

Now we want our neuron to take kilometers as input and convert them into miles. This is still a basic computation task. Let's have a look at the diagram.

But here comes the twist. We assume that we do not know the exact relationship between kilometers and miles. Instead we want the neuron to learn it.

To make this happen, we assume that the relationship is linear. So let's have a look at the equation:

\( miles = kilometers * c \)

The constant c represents our missing knowledge about the relationship between kilometers and miles. The goal is for the neuron to find c on its own. However, we must provide some data that the neuron can use for orientation. Therefore we have the following table, which contains two data points we observed in the real world. This is our training data.

| Datapoint | Kilometers | Miles |
|---|---|---|
| 1 | 0 | 0 |
| 2 | 100 | 62.137 |

When we initialize the neuron, we set c to a random value. For now we use c = 0.5. At this point we can run our very first training iteration. We use our second data point and do the math:

\( miles = 100 * 0.5 \)

\( miles = 50 \)

Based on our prediction (the result of the neuron's calculation), 100 kilometers are 50 miles. But our real-world data states that the correct value is 62.137. So our prediction is not correct yet. Let's have a look at how we can calculate how far we're off.

\( error = correct - prediction \)

The equation above states that we can calculate the error from the correct value in our observation table and the neuron's prediction.

\( error = 62.137 - 50 \)

\( error = 12.137 \)
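This first training iteration can be sketched in a few lines of Python. The function names `predict` and `prediction_error` are chosen for illustration only; the numbers are the second data point from the observation table and the initial guess c = 0.5.

```python
def predict(kilometers: float, c: float) -> float:
    """Linear model from the text: miles = kilometers * c."""
    return kilometers * c


def prediction_error(correct_miles: float, predicted_miles: float) -> float:
    """Error as used in the text: correct value minus prediction."""
    return correct_miles - predicted_miles


# Second data point from the observation table and the initial guess for c.
kilometers, correct_miles = 100, 62.137
c = 0.5

predicted = predict(kilometers, c)
error = prediction_error(correct_miles, predicted)
print(f"prediction: {predicted:.3f} miles, error: {error:.3f}")
```

Rounded to three decimals, this reproduces the prediction of 50 miles and the error of 12.137 from the calculation by hand.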

It seems like we produced an error of 12.137. Based on this information we can now work on a better prediction. So let us make some adjustments to our neuron.

Initially we set c = 0.5 and got a large error. Now we adjust the parameter to c = 0.6 and continue with our second training iteration. We start by calculating the neuron's prediction.

\( miles = 100 * 0.6 \)

\( miles = 60 \)

And continue calculating the error with the new prediction.

\( error = 62.137 - 60 \)

\( error = 2.137 \)

When we compare the two error values, we see that our neuron has improved quite a lot: from predictions that are off by 12.137 to predictions with a significantly smaller error of 2.137. So we do it again. We adjust the parameter to c = 0.7 and calculate the new prediction.

\( miles = 100 * 0.7 \)

\( miles = 70 \)

Then we calculate the error.

\( error = 62.137 - 70 \)

\( error = -7.863 \)

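But this time, when we compare the error values, we see that we performed worse again! The error is now negative, which means our prediction overshot the correct value, and its magnitude of 7.863 is larger than the 2.137 we had before.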
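The three attempts so far can be replayed in a short loop. This is only a sketch of the manual procedure; the variable names are illustrative, and the values for c are the ones we tried by hand.

```python
# Second data point from the observation table.
kilometers, correct_miles = 100, 62.137

# Values for c tried by hand so far.
candidates = [0.5, 0.6, 0.7]

errors = {}
for c in candidates:
    predicted = kilometers * c
    errors[c] = correct_miles - predicted
    print(f"c = {c:.2f}  prediction = {predicted:6.2f}  error = {errors[c]:+.3f}")

# The sign shows the direction of the miss, the magnitude shows how far off we are.
best_c = min(errors, key=lambda c: abs(errors[c]))
print(f"smallest absolute error so far for c = {best_c}")
```

Comparing absolute values makes the overshoot visible: c = 0.7 misses in the other direction and by more than c = 0.6 did.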

This observation teaches us a very important lesson. The bigger the error the bigger the adjustment we need to perform. But as the error decreases we must decrease the adjustment as well.
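One way to turn this lesson into a rule, sketched here as an assumption rather than as the only option, is to make the adjustment proportional to the error. The function name `adjustment` and the `learning_rate` of 0.5 are hypothetical choices for illustration. Dividing by the kilometer input converts an error measured in miles into a change of c.

```python
def adjustment(error: float, kilometers: float, learning_rate: float = 0.5) -> float:
    """Step for c that scales with the error (learning_rate is a hypothetical value)."""
    return learning_rate * error / kilometers


# Second data point from the observation table and the initial guess for c.
kilometers, correct_miles = 100, 62.137
c = 0.5

for step in range(1, 4):
    error = correct_miles - kilometers * c
    delta = adjustment(error, kilometers)
    c += delta
    print(f"step {step}: error = {error:.3f}, adjustment = {delta:.5f}, new c = {c:.5f}")
```

With this rule the steps shrink automatically as the error shrinks, so we no longer have to pick each new value for c by hand.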

So let us rewind the last training iteration and start over by setting our parameter to c = 0.61. We start with the neuron's prediction.

\( miles = 100 * 0.61 \)

\( miles = 61 \)

And continue with the error calculation.

\( error = 62.137 - 61 \)

\( error = 1.137 \)

Here we go! We again decreased the error, this time to 1.137. If we continue this process, we end up with a neuron that has successfully learned the relationship between kilometers and miles.

Let us pause for a moment at this point and consider what we have just done. We did not solve the problem exactly in a single step, as we often do in school mathematics or scientific work. Instead we followed a completely different approach and improved our solution step by step until we got close to the correct result.
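For comparison, the single-step, school-mathematics way would be to solve the linear equation for c directly. A minimal sketch using the second data point from the observation table (the variable name `c_exact` is illustrative):

```python
# Second data point from the observation table.
kilometers, correct_miles = 100, 62.137

# Solve miles = kilometers * c for c in one step.
c_exact = correct_miles / kilometers
print(f"c = {c_exact:.5f}")
```

This shortcut only works because we assumed a linear relationship with a single unknown and have a data point with a non-zero kilometer value; the first data point (0, 0) would lead to a division by zero. The step-by-step approach does not rely on being able to rearrange the equation.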

Implementation

This code block implements the procedure from above.

#!/usr/bin/python3

class Neuron:

    def __init__(self):
        """
        Initialize Neuron.
        """
        self.c = 0.5
        self.learning_rate = 0.01
        self.observation_table = [(100, 62.137)]

    def train(self, epochs=5):
        """
        This function trains the neuron.
        """
        for e in range(epochs+1):
            for km, miles in self.observation_table:
                pred = km * self.c
                error = miles - pred
                delta = self.learning_rate * (error / km)
                self.update_c(delta=delta)

            if e % 50 == 0:
                print(f"[ Epoch {e:4.0f}/{epochs} ] c : {self.c:0.4f} - delta : {delta:0.4f} - error : {error:0.6f}")

    def update_c(self, delta=0.1):
        """
        This function updates the parameter c.
        """
        self.c += delta

    def eval(self, kilometers=100):
        """
        This function evaluates the neuron.
        """
        pred = kilometers * self.c
        error = (kilometers * 0.6213712) - pred
        return f" > {kilometers} kilometers are {pred:0.2f} miles (error : {error:0.4f})"


if __name__ == "__main__":
    neuron = Neuron()

    # Evaluate the neuron without training
    print("--- Neurons' prediction without any training ---\n")
    print(neuron.eval(kilometers=100))

    # Train the neuron
    print("\n--- Training ---")
    neuron.train(epochs=1000)

    # Evaluate the neuron after the training
    print("\n--- Neurons' prediction after the training ---\n")
    print(neuron.eval(kilometers=100))

To run the code, copy the content and save it to a file called neuron.py. Then you can run the code with the following command.

python3 neuron.py

You should see the following output in your terminal.

--- Neurons' prediction without any training ---

 > 100 kilometers are 50.00 miles (error : 12.1371)

--- Training ---
[ Epoch    0/1000 ] c : 0.5012 - delta : 0.0012 - error : 12.137000
[ Epoch   50/1000 ] c : 0.5487 - delta : 0.0007 - error : 7.342959
...
[ Epoch  950/1000 ] c : 0.6214 - delta : 0.0000 - error : 0.000866
[ Epoch 1000/1000 ] c : 0.6214 - delta : 0.0000 - error : 0.000524

--- Neurons' prediction after the training ---

 > 100 kilometers are 62.14 miles (error : 0.0006)

Conclusion

We have learned a lot today! We have seen that, depending on the area, sometimes machines are better problem solvers and sometimes people are. We learned about neural networks and their building blocks, and we took a deeper look into how they work. We did this by teaching a neuron the relationship between kilometers and miles, following the human "trial and error" learning process instead of the always-correct, single-shot way of school mathematics. In the end, we implemented our example in Python and thus concluded this first article.

I hope you enjoyed reading today's post. If you want to continue learning about linear classification, have a look at the next part of the series, where we make the step from neurons predicting a single parameter to simple classifiers.

Citation

If you found this article helpful and would like to cite it, you can use the following BibTeX entry.

@misc{hacking_and_security,
  title={Introduction to Neural Networks - Strengths and weaknesses},
  url={https://hacking-and-security.cc/introduction-to-neural-networks-part-1},
  author={Zimmermann, Philipp},
  year={2024},
  month={Jan}
}